Dec 13, 2024

14 UI Patterns for generative AI

Co-Founder of Intercom

* Originally posted by Des Traynor on Twitter, who agreed to share it here too. If you have suggestions, please share them there!

I've yet to see a good thread summarising the new types of UI that generative AI has necessitated, so here's an attempt to start one (in the hope that you all can contribute!). I'm not necessarily endorsing any of these; I just see them all as relevant to our future…

👉 We'll need UI for taking big, messy inputs of text (or audio) and visualising the actions taken as a result. Here's one example by @Day_ai_app /cc @markitecht

👉 As we use probabilistic technology to build deterministic workflows (and vice versa), we'll want to show any broken logic.

👉 These workflows will need to visualise their now-probabilistic triggers and the steps taken, for debugging/analysis (especially if generated *by* AI, along with being powered by AI). Here's @attio with some nice touches.

👉 When generating answers to questions, we'll need to visualise where they came from so they can be verified/fixed. Here's @intercom Copilot

👉 "Just type" UIs will be much cleaner and more beautiful to look at. Here's @mymind showing that pretty clearly, with gorgeous results.

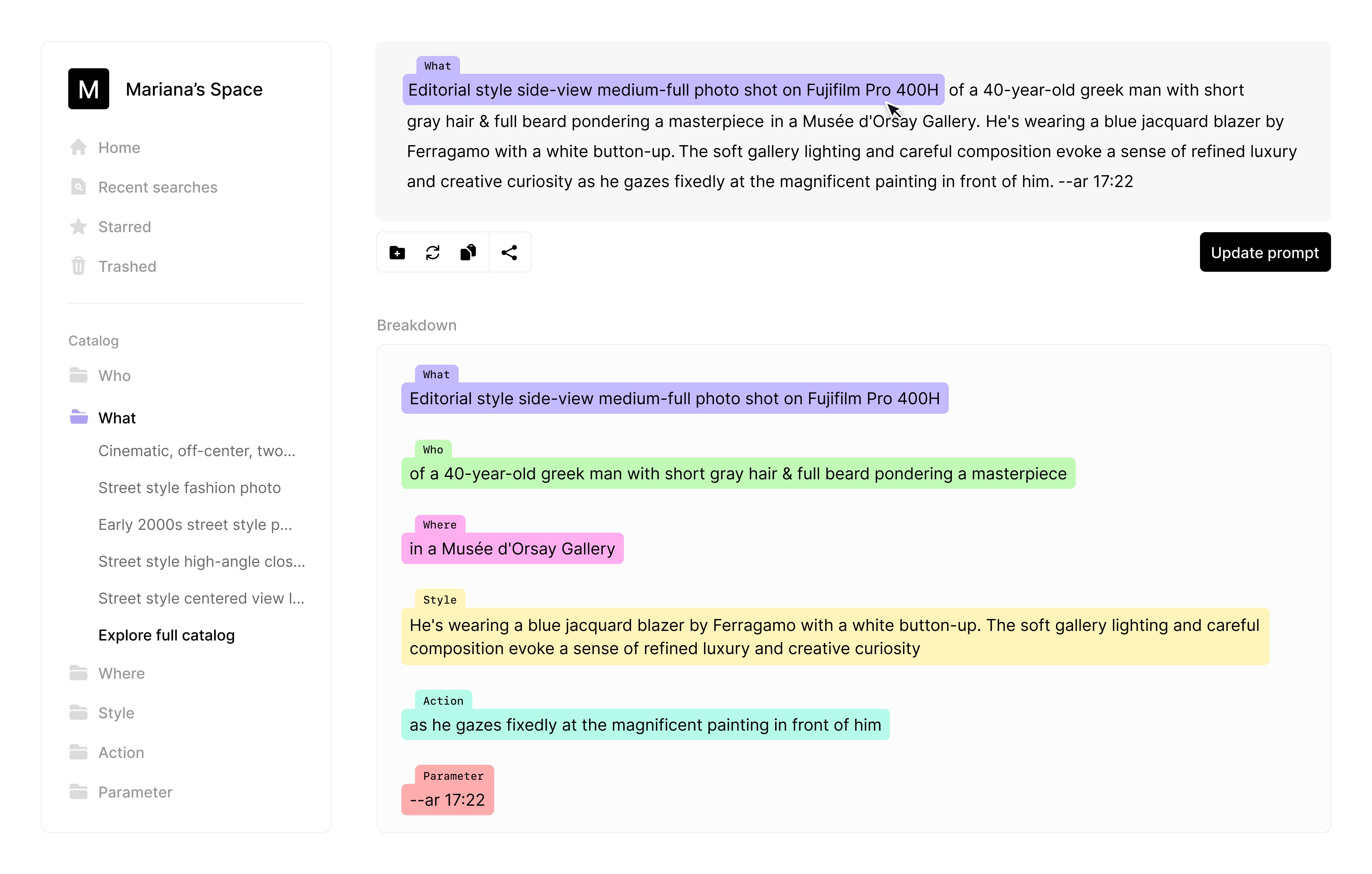

👉 We're going to be working with texts & prompts quite a bit, and we'll need ways to visualise which snippets are relevant for which purposes. Here's a nice mock by @mrncst.

👉 I suspect "visual prompting" will become an input mechanism in UI (often a sketch is just way easier than a description of a sketch)

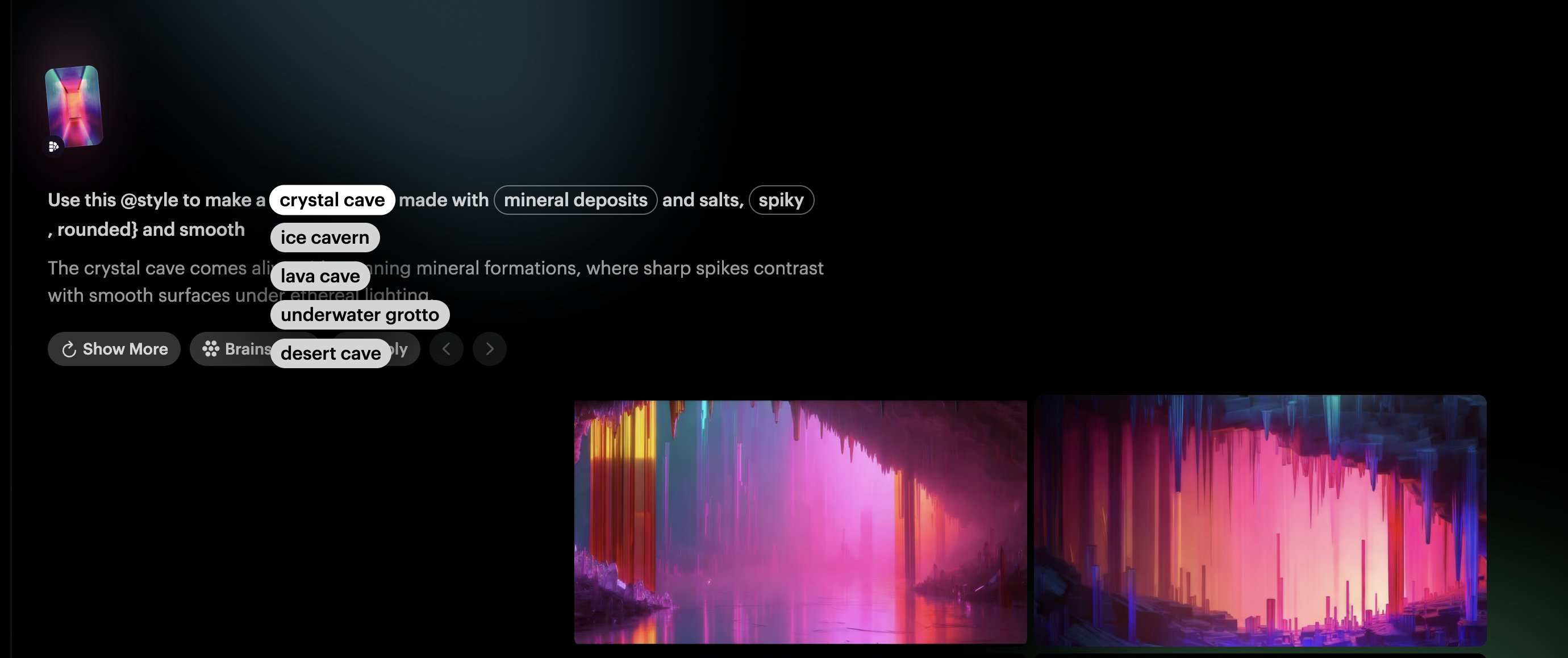

👉 We'll need richer inputs to guide users in their creations and let them be more expressive (show, don't tell). This is nice from @msfeldstein.

👉 This work by @jsngr goes even further: a few strokes give a visual direction of what you're looking for, and it does the rest. A "visual copilot", if you will (and I will).

👉 We'll need more ingenuity to handle lots of text inputs. Here's a simple example of identifying the "load-bearing" words in a prompt and suggesting further manipulation (@LumaLabsAI via @aishashok14).

👉 This "color picker for words" is an absolute banger of a concept by @MatthewWSiu. This could be great for prompt tweaking (especially with real-time responses/feedback).

👉 "Concept Clustering" by @runwayml is great: it decomposes the user's creation into its salient pieces and allows manipulation at the lower level.

👉 Going back to system/workflow design, here we see a system designed purely by expressing expected inputs and outputs (thus letting AI guide in creating the system itself) by @io_sammt.

👉 We will need to visualise AI-created processes or algorithms, aka "show your chain of thought". Here's a beautiful visualisation by @pranavmarla. The tricky prompt is deconstructed before your eyes into solvable atomic statements, which are easier to interrogate and adapt.

💡 Remember: if you want to add a pattern of your own, head over to the original thread!